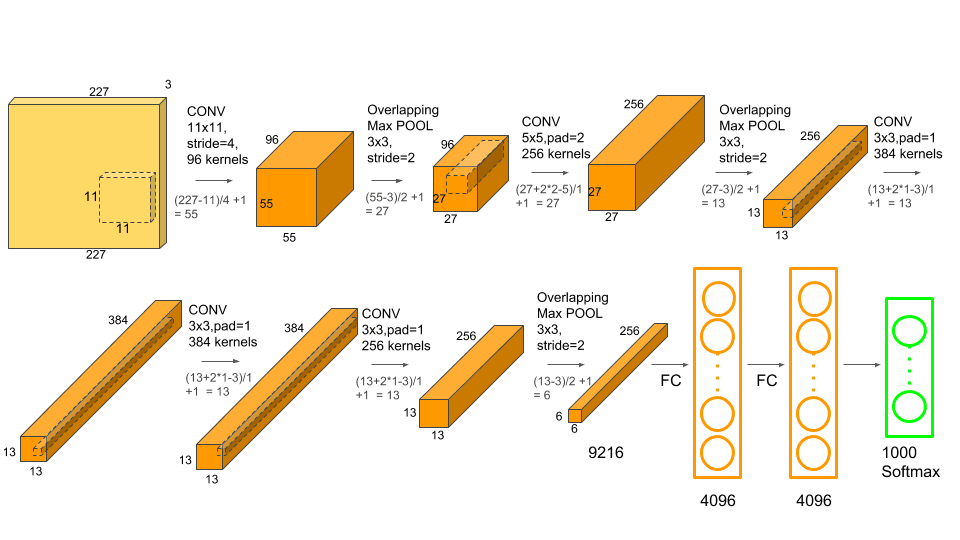

AlexNet

AlexNet is a deep convolutional neural network introduced by Alex Krizhevsky and his colleagues in 2012. It was trained to classify images from the ImageNet LSVRC-2010 dataset, where it achieved state-of-the-art results, and a variant of the model went on to win the ILSVRC-2012 competition. It is a simple yet powerful architecture that helped pave the way for much of today's deep learning research. You can read more about the model in the original research paper, ImageNet Classification with Deep Convolutional Neural Networks.

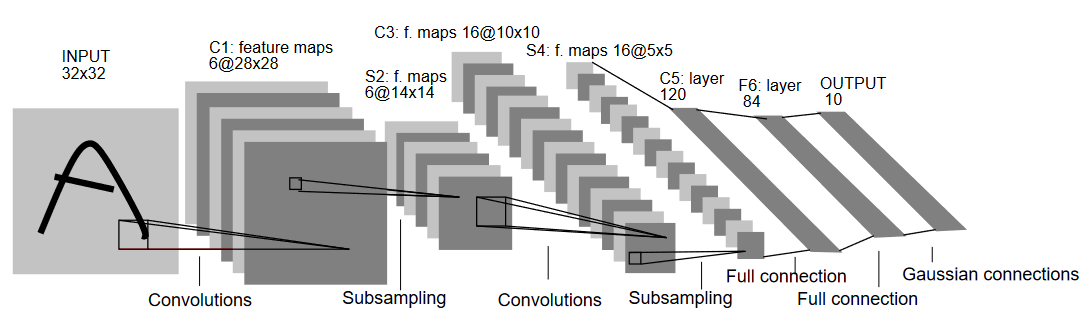

LeNet-5

LeNet-5 was introduced by Yann LeCun, Léon Bottou, Yoshua Bengio and Patrick Haffner in 1998 in the paper Gradient-Based Learning Applied to Document Recognition. It is a classic convolutional neural network built from convolutional, pooling and fully connected layers, and it was applied to handwritten digit recognition on the MNIST dataset.
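To make the mix of convolution, pooling and fully connected layers concrete, here is a minimal sketch that traces the feature-map size through each LeNet-5 stage. The layer sizes (6, 16 and 120 feature maps, 5x5 kernels, 2x2 pooling, 84 hidden units, 10 outputs, 32x32 input) follow the 1998 paper; the helper names `conv_out` and `lenet5_shapes` are hypothetical and exist only for this illustration. It does only the shape arithmetic and is not a trainable model.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet5_shapes(input_size=32):
    """Return (layer name, channels, height/width) for each LeNet-5 stage."""
    shapes = [("input", 1, input_size)]
    size = input_size
    # (name, output channels, kernel size, stride); sizes taken from the paper
    stages = [
        ("C1 conv 5x5", 6, 5, 1),
        ("S2 pool 2x2", 6, 2, 2),
        ("C3 conv 5x5", 16, 5, 1),
        ("S4 pool 2x2", 16, 2, 2),
        ("C5 conv 5x5", 120, 5, 1),
    ]
    for name, channels, kernel, stride in stages:
        size = conv_out(size, kernel, stride)
        shapes.append((name, channels, size))
    # Fully connected layers have no spatial extent, so the size stays 1.
    shapes.append(("F6 fully connected", 84, 1))
    shapes.append(("output", 10, 1))
    return shapes


if __name__ == "__main__":
    for name, channels, size in lenet5_shapes():
        print(f"{name:20s} {channels:4d} x {size} x {size}")
```

MNIST digits are 28x28 pixels; the paper pads them to 32x32, which is why the sketch defaults to `input_size=32`. With that input, the last convolution (C5) reduces each feature map to a single value, which is what lets it feed directly into the fully connected layers.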