import numpy as np
import torch
import torch.nn as nn
from torchvision import datasets
from torchvision import transforms
from torch.utils.data.sampler import SubsetRandomSampler

# Device configuration
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

Architecture

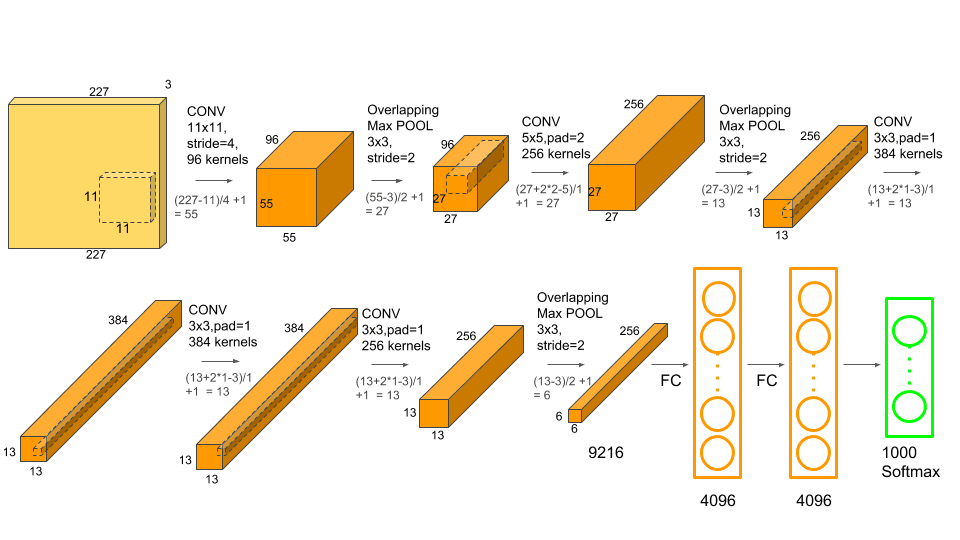

Unlike LeNet-5, AlexNet operated on 3-channel images of size 224x224x3. It used ReLU activations and max pooling for subsampling. The convolution kernels were 11x11, 5x5, or 3x3, while the max-pooling kernels were 3x3. The network classified images into 1000 classes and was trained across multiple GPUs.
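To see how these kernel sizes shape the network, the spatial size of each feature map can be computed with the standard formula floor((n + 2p - k) / s) + 1. The sketch below is only an illustration: it assumes a 227x227 input and the strides and paddings used in the implementation later in this article (the paragraph above does not state them), and `out_size` is a hypothetical helper name.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding); assumed values, matching the model
# defined later in this article.
layers = [
    ("conv1 11x11", 11, 4, 0),
    ("pool1 3x3", 3, 2, 0),
    ("conv2 5x5", 5, 1, 2),
    ("pool2 3x3", 3, 2, 0),
    ("conv3 3x3", 3, 1, 1),
    ("conv4 3x3", 3, 1, 1),
    ("conv5 3x3", 3, 1, 1),
    ("pool5 3x3", 3, 2, 0),
]

size = 227  # assumed input height and width
sizes = []
for name, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    sizes.append(size)
    print(f"{name}: {size}x{size}")

# The last convolution block has 256 channels, so the flattened
# feature vector fed to the first fully connected layer has:
flat = 256 * size * size
print("flattened features:", flat)
```

Under these assumptions the final feature map is 6x6 with 256 channels, which is where the 9216 input features of the first fully connected layer come from.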

Data Loading

Dataset

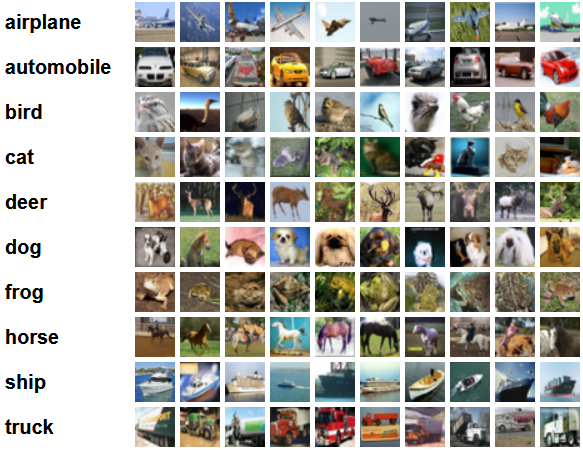

Let’s start by loading and then pre-processing the data. For our purposes, we will be using the CIFAR10 dataset. The dataset consists of 60000 32x32 colour images in 10 classes, with 6000 images per class. There are 50000 training images and 10000 test images.

The classes in the dataset are mutually exclusive; there is no overlap between them.

Importing the libraries

Let’s start by importing the required libraries along with defining a variable device, so that the Notebook knows to use a GPU to train the model if it’s available.

Loading the dataset

Using torchvision (a helper library for computer vision tasks), we will load our dataset. The library provides helper functions that make pre-processing straightforward. Let’s define the functions get_train_valid_loader and get_test_loader, and then call them to load and process our CIFAR-10 data.
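The train/validation split performed inside get_train_valid_loader can be looked at in isolation. The following is a minimal sketch of the same index logic using only NumPy; `split_indices` is a hypothetical helper name introduced here for illustration, and the sample count of 50000 is the CIFAR-10 training set size mentioned above.

```python
import numpy as np


def split_indices(num_samples, valid_size=0.1, random_seed=1, shuffle=True):
    """Return (train_idx, valid_idx) lists that partition range(num_samples)."""
    indices = list(range(num_samples))
    split = int(np.floor(valid_size * num_samples))
    if shuffle:
        # Seeding makes the split reproducible across runs.
        np.random.seed(random_seed)
        np.random.shuffle(indices)
    # The first `split` shuffled indices are held out for validation.
    return indices[split:], indices[:split]


train_idx, valid_idx = split_indices(50000)
print(len(train_idx), len(valid_idx))
```

Because both loaders sample from disjoint index lists of the same underlying training set, no validation image is ever seen during training.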

def get_train_valid_loader(data_dir,
                           batch_size,
                           augment,
                           random_seed,
                           valid_size=0.1,
                           shuffle=True):
    # Per-channel mean and std of the CIFAR-10 training set
    normalize = transforms.Normalize(
        mean=[0.4914, 0.4822, 0.4465],
        std=[0.2023, 0.1994, 0.2010],
    )

    # define transforms
    valid_transform = transforms.Compose([
        transforms.Resize((227,227)),
        transforms.ToTensor(),
        normalize,
    ])
    if augment:
        train_transform = transforms.Compose([
            transforms.RandomCrop(32, padding=4),
            transforms.RandomHorizontalFlip(),
            # Resize after augmenting so the model still receives 227x227 inputs
            transforms.Resize((227,227)),
            transforms.ToTensor(),
            normalize,
        ])
    else:
        train_transform = transforms.Compose([
            transforms.Resize((227,227)),
            transforms.ToTensor(),
            normalize,
        ])

    # load the dataset (same images twice, with different transforms)
    train_dataset = datasets.CIFAR10(
        root=data_dir, train=True,
        download=True, transform=train_transform,
    )

    valid_dataset = datasets.CIFAR10(
        root=data_dir, train=True,
        download=True, transform=valid_transform,
    )

    # split the training indices into training and validation subsets
    num_train = len(train_dataset)
    indices = list(range(num_train))
    split = int(np.floor(valid_size * num_train))

    if shuffle:
        np.random.seed(random_seed)
        np.random.shuffle(indices)

    train_idx, valid_idx = indices[split:], indices[:split]
    train_sampler = SubsetRandomSampler(train_idx)
    valid_sampler = SubsetRandomSampler(valid_idx)

    train_loader = torch.utils.data.DataLoader(
        train_dataset, batch_size=batch_size, sampler=train_sampler)

    valid_loader = torch.utils.data.DataLoader(
        valid_dataset, batch_size=batch_size, sampler=valid_sampler)

    return (train_loader, valid_loader)

def get_test_loader(data_dir,
                    batch_size,
                    shuffle=True):
    # Use the same CIFAR-10 statistics as in training, so that test
    # inputs are normalized consistently with the training inputs
    normalize = transforms.Normalize(
        mean=[0.4914, 0.4822, 0.4465],
        std=[0.2023, 0.1994, 0.2010],
    )

    # define transform
    transform = transforms.Compose([
        transforms.Resize((227,227)),
        transforms.ToTensor(),
        normalize,
    ])

    dataset = datasets.CIFAR10(
        root=data_dir, train=False,
        download=True, transform=transform,
    )

    data_loader = torch.utils.data.DataLoader(
        dataset, batch_size=batch_size, shuffle=shuffle
    )

    return data_loader

# CIFAR10 dataset
train_loader, valid_loader = get_train_valid_loader(data_dir = './data', batch_size = 64,
                                                    augment = False, random_seed = 1)

test_loader = get_test_loader(data_dir = './data',
                              batch_size = 64)

AlexNet from Scratch

class AlexNet(nn.Module):
    def __init__(self, num_classes=10):
        super(AlexNet, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=0),
            nn.BatchNorm2d(96),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size = 3, stride = 2))
        self.layer2 = nn.Sequential(
            nn.Conv2d(96, 256, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size = 3, stride = 2))
        self.layer3 = nn.Sequential(
            nn.Conv2d(256, 384, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(384),
            nn.ReLU())
        self.layer4 = nn.Sequential(
            nn.Conv2d(384, 384, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(384),
            nn.ReLU())
        self.layer5 = nn.Sequential(
            nn.Conv2d(384, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size = 3, stride = 2))
        self.fc = nn.Sequential(
            nn.Dropout(0.5),
            nn.Linear(9216, 4096),
            nn.ReLU())
        self.fc1 = nn.Sequential(
            nn.Dropout(0.5),
            nn.Linear(4096, 4096),
            nn.ReLU())
        self.fc2= nn.Sequential(
            nn.Linear(4096, num_classes))

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = self.layer5(out)
        # Flatten (N, 256, 6, 6) feature maps to (N, 9216)
        out = out.reshape(out.size(0), -1)
        out = self.fc(out)
        out = self.fc1(out)
        out = self.fc2(out)
        return out

Setting Hyperparameters

num_classes = 10
num_epochs = 20
batch_size = 64
learning_rate = 0.005

model = AlexNet(num_classes).to(device)

# Loss and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate, weight_decay = 0.005, momentum = 0.9)

Training

total_step = len(train_loader)

for epoch in range(num_epochs):
    # Training mode: enables dropout and batch-norm statistic updates
    model.train()
    for i, (images, labels) in enumerate(train_loader):
        # Move tensors to the configured device
        images = images.to(device)
        labels = labels.to(device)

        # Forward pass
        outputs = model(images)
        loss = criterion(outputs, labels)

        # Backward and optimize
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Report the loss of the last batch in this epoch
    print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}'
          .format(epoch+1, num_epochs, i+1, total_step, loss.item()))

    # Validation
    # Evaluation mode: disables dropout and uses running batch-norm statistics
    model.eval()
    with torch.no_grad():
        correct = 0
        total = 0
        for images, labels in valid_loader:
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
            del images, labels, outputs

    print('Accuracy of the network on the {} validation images: {} %'.format(total, 100 * correct / total))
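The test_loader created earlier can be scored the same way as the validation set once training has finished. The block below is a minimal sketch rather than part of the original code: `evaluate` is a hypothetical helper name, and the toy model and random data exist only to show the call pattern without downloading CIFAR-10.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, device):
    """Return the top-1 accuracy (in percent) of `model` over `loader`."""
    model.eval()  # disable dropout, use running batch-norm statistics
    correct, total = 0, 0
    with torch.no_grad():
        for images, labels in loader:
            images, labels = images.to(device), labels.to(device)
            predicted = model(images).argmax(dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    return 100.0 * correct / total


# Toy stand-ins (assumptions for illustration only): a linear classifier
# on random 3x8x8 "images" with random labels.
toy_device = torch.device("cpu")
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10)).to(toy_device)
toy_data = TensorDataset(torch.randn(32, 3, 8, 8), torch.randint(0, 10, (32,)))
toy_loader = DataLoader(toy_data, batch_size=8)
print("Accuracy: {:.2f} %".format(evaluate(toy_model, toy_loader, toy_device)))
```

With the objects defined in this article, the equivalent call would be evaluate(model, test_loader, device).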